The Math Behind the NFL's Decision to Call First Downs with Computer Vision

The days of the chain gang are numbered...

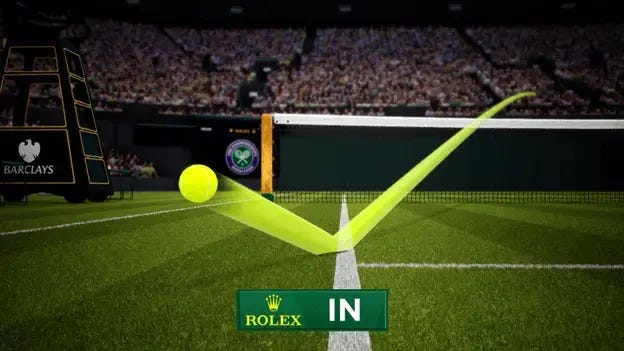

If you’ve watched televised tennis, baseball, or even soccer in the last few years, you’ve likely seen a familiar sight right after a referee or umpire makes a close call: an animated recreation of the play appears, and the virtual camera zooms in on the critical location being measured. It might be a tennis ball just in or out, a player’s shoulder sticking just past the last defender for an offsides call, or a ball just nicking the corner of the strike zone. The accuracy and precision implied by the visualization (and therefore by the measurement system behind it) seem infinite, as if it could split a hair if it had to. Of course, if you’re like me, you immediately use this to scrutinize how much other sports still rely on relatively inaccurate human judges and measurements.

As it turns out, the NFL must have thought something similar, because earlier this year (on April 1st, which just begs for confusion), they announced that starting this season they will use Sony’s Hawk-Eye system to measure first downs. Watching a referee plant a football on the ground and then seeing two people run onto the field with hi-vis poles connected by a chain has long seemed like an intentionally inaccurate way to measure anything, let alone something as important as a first down, so I think fans will welcome the change. For better or worse, though, the chain gang might soon officially be a thing of the past, and 10 years from now it may be mentioned in the same breath as other antiquated sports artifacts like leather football helmets and Halloween-style NHL goalie masks.

Finally, the NFL can move into the modern age and adopt the sub-femtometer ball-tracking technology that Wimbledon has used on close shots for years! Well... mostly. At the very least, they will measure ball placement more accurately than a platoon of people manipulating two poles 10 yards apart by hand. Beneath the surface, though, the technology used to track ball and player position hides a lot of subtleties, and together they add up to accuracy that is surprisingly poor (to me, at least) and far from the level implied by the animated replays and overlays at most events. To illustrate, the list below highlights some notable recent uses of automated ball-position tracking and their accuracy, as reported by the leagues’ own internal testing or by academic studies:

- MLB Ball/Strike Calling (2020): ±0.25” (on average)
- Wimbledon Hawk-Eye (2020): ±0.10” (mean error)
- FIFA Semi-automated Offsides Technology (2024): Est. ±1.6”

If you’re like me, the relative magnitude of uncertainty in these numbers might be a bit shocking, but it’s not for a lack of trying on the part of the system designers. In the past 20 years or so, companies have explored the use of three main technologies to track ball and player position while continually capturing spatial information over time to derive movement. Each of them has relatively straightforward benefits and drawbacks, and a wide range of relative accuracy:

- Global Navigation Satellite System (GNSS): Receivers use satellite constellations like the GPS network to triangulate their position outdoors, typically to a best-case accuracy of ~12” at 10-18 updates per second. Low accuracy and low cost.
- Local Positioning System (LPS): Using the same triangulation principle as GPS on a local scale, static ultra-wideband (UWB) radio beacons track transceivers inside the ball or on the athlete, indoors or outdoors, to a best-case accuracy of ~4” at 10-20 updates per second. Medium accuracy and medium cost.
- Computer Vision: Techniques ranging from basic image analysis to AI analyze camera frames (typically in the visible spectrum) to derive relative positions. Performance depends heavily on the cameras themselves, reaching accuracy of ~0.1” at up to 300+ updates per second. High accuracy and highly variable (but generally medium) cost.

Even from these two-sentence summaries, it’s easy to see why the NFL chose a computer-vision-based technique for high-accuracy ball tracking. The decision becomes even clearer given how quickly high-speed video capture and frame-analysis tools are improving in performance and dropping in cost thanks to the proliferation of machine learning and AI. Interestingly, this move also marks a major departure from the LPS product from Zebra Technologies that the NFL currently uses to track player and ball position and movement for Next Gen Stats and activity analysis. Knowing that Zebra’s system only achieves accuracy to ±6” as of 2024 (according to the NFL themselves), it’s clear why they needed a new solution for higher-accuracy applications. I still think they’ll keep using Zebra’s system for lower-accuracy applications like Next Gen Stats tracking, but I wouldn’t be surprised if the NFL eventually uses Hawk-Eye for those functions as well, or at least uses it to augment them.

I won’t spend more time on GNSS and LPS in this article because Hawk-Eye is a computer-vision-based system, but if you want more info about them, I recommend checking out the very first Recess article!

Recess #1: What is a Local Positioning System?

Recess articles will typically provide quick insights on salient sports tech news as it breaks. To kick off 2025, I’ve cherry-picked a technical topic, though to be completely transparent, this Recess is probably longer and more serious than most will be. Enjoy!

It’s worth clarifying at this point that the NFL will start out using this system only to call first downs (a.k.a. making “line-to-gain measurements”); they won’t use the system to actually spot the ball for the measurement. Their primary goal, for now, is simply to remove the time-intensive and relatively inaccurate step where a crew of linesmen rushes onto the field with chains to measure the distance. It’s hard to imagine that the NFL won’t eventually expand the scope and use this technology to determine the spot of the ball as well. Still, a beachhead use case of measuring a static ball with a clear field of view from multiple cameras is a great starting point for adopting the technology more broadly. For now, the chain gang will still stand on the sideline as a backup to the Hawk-Eye system, but as noted earlier, I think their days are numbered…

Now that we know why the NFL opted for a computer-vision-based measurement product, we need to understand how these systems work (at least at a high level). A computer vision system that tracks the position of anything in 3D space needs at least two calibrated cameras that can see the target, and in practice most systems use three or more, which we’ll discuss in a moment. Operators “calibrate” these cameras as carefully as possible by recording the position of each camera and correlating individual pixels or sets of pixels in the frame to known, fixed locations in the field of view. Within each camera’s frame, systems typically detect the target object (or objects) either by screening for specific color values and/or color changes between pixels, or by using object detection algorithms like “You Only Look Once” (YOLO). A well-calibrated system can then measure the number of pixels (effectively the distance) between the target object and the known calibrated points, and multiple cameras can be used to triangulate the object’s position in 3D space.
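To make the triangulation step concrete, here is a minimal Python sketch of one textbook approach (least-squares ray intersection, sometimes called the midpoint method). It is not a description of Hawk-Eye’s actual pipeline. It assumes calibration and detection have already turned each camera’s pixel detection into a 3D ray starting at that camera’s known position, and the camera positions and ball location are made-up numbers in inches. The solver finds the single point closest, in a least-squares sense, to all the rays, and it works with two or more cameras.

```python
import math

Vec = tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    """Scale a vector to unit length."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a: list[list[float]], b: list[float]) -> Vec:
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("rays are (nearly) parallel; need more distinct viewpoints")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        x.append(det3(m) / d)
    return (x[0], x[1], x[2])


def triangulate(centers: list[Vec], directions: list[Vec]) -> Vec:
    """Point minimizing the summed squared distance to every ray c + t*d.

    Solves sum_i (I - d_i d_i^T) p = sum_i (I - d_i d_i^T) c_i.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centers, directions):
        d = normalize(d)
        for i in range(3):
            for j in range(3):
                # Entry (i, j) of the projector onto the plane perpendicular to the ray
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p_ij
                b[i] += p_ij * c[j]
    return solve3(a, b)


# Hypothetical setup, in inches: three cameras high in the stands, ball near the turf.
ball = (1800.0, 950.0, 5.5)
cameras = [(0.0, -600.0, 1200.0), (4320.0, -600.0, 1200.0), (2160.0, 2700.0, 1300.0)]
# With perfect calibration, each ray points exactly from its camera to the ball.
rays = [(ball[0] - c[0], ball[1] - c[1], ball[2] - c[2]) for c in cameras]
estimate = triangulate(cameras, rays)
print(estimate)  # approximately (1800.0, 950.0, 5.5), up to floating-point rounding
```

With noisy rays, the same function returns a compromise point, and each additional camera viewing from a different angle tightens that estimate, which is one reason real systems use more than the minimum.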

Critically, the images from every camera need to be captured at the exact same moment to have high confidence in the object’s position; otherwise, you are trying to reconcile contradictory images. Because of timing fluctuations, the need to estimate the object’s position between captured frames, camera shake, and imperfect calibration, systems typically don’t produce perfectly precise location estimates at all times, so they rely on advanced algorithms and additional redundant views to shrink the 3D envelope in which the ball may be located. Additional information, like the object’s size in the frame and its known shape, can also make the position estimate more accurate. To measure the object’s velocity (speed and direction), you then compare its position across multiple frames to see how far, and in what direction, it traveled in 3D space.
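The velocity step can be sketched as a straight-line fit of position against time on each axis. This is a minimal Python example assuming a constant-velocity model (no gravity, drag, or spin) and hypothetical, noise-free positions; `fit_velocity` and `sync_error` are illustrative names, not part of any tracking product. The second helper shows why synchronization matters: a camera that fires late sees the ball wherever it has moved in the meantime.

```python
import math

Vec = tuple[float, float, float]


def fit_velocity(times: list[float], positions: list[Vec]) -> Vec:
    """Least-squares velocity assuming constant velocity across the frames.

    Fits position = x0 + v * t independently on each axis and returns v.
    """
    n = len(times)
    if n < 2 or n != len(positions):
        raise ValueError("need two or more timestamped positions")
    t_mean = sum(times) / n
    s_tt = sum((t - t_mean) ** 2 for t in times)
    if s_tt == 0:
        raise ValueError("timestamps must differ")
    v = []
    for axis in range(3):
        xs = [p[axis] for p in positions]
        x_mean = sum(xs) / n
        s_tx = sum((t - t_mean) * (x - x_mean) for t, x in zip(times, xs))
        v.append(s_tx / s_tt)
    return (v[0], v[1], v[2])


def sync_error(speed: float, offset_s: float) -> float:
    """Distance an object moves during a camera timing offset (speed's length units)."""
    return speed * offset_s


# Hypothetical 90 mph pitch (1,584 in/s toward the plate) sampled at 100 FPS.
fps = 100
times = [i / fps for i in range(5)]
positions = [(0.0, 600.0 - 1584.0 * t, 60.0 - 30.0 * t) for t in times]
velocity = fit_velocity(times, positions)
speed = math.sqrt(sum(c * c for c in velocity))
print(velocity, speed)
# A camera firing just 1 ms late sees this pitch about 1.6 inches from where the others do.
print(sync_error(1584.0, 0.001))
```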

Hopefully this high-level description of the system and process prompts some initial thoughts about which technical specifications make a computer-vision-based ball-tracking system more or less accurate. Beyond the number of cameras and the quality of the algorithms used to reduce uncertainty in the ball’s location, the underlying performance and placement of the cameras themselves determine the theoretical maximum performance of a vision-based tracking system. Thankfully, we only need four key pieces of information about the cameras (resolution, field of view, lens type, and frame rate) and one about the object(s) being tracked (size) to determine the theoretical baseline accuracy of a system measuring an object’s position and velocity.

The combination of camera resolution and field of view determines one of the most critical baseline numbers: how much physical distance each pixel represents. The camera’s resolution sets how many pixels there are in the x- and y-directions, while the camera’s position and the lens focal length set how much physical distance falls within the “box” of a single pixel, which is effectively your smallest increment of measurement. Knowing the lens type further improves accuracy by letting the system account for distortion and parallax effects, i.e., how the contents of a single pixel change in shape and scale depending on where they land on the sensor (though this is less critical for overall performance). Together, these three specs provide a basic understanding of the theoretical maximum accuracy and precision of the system’s position estimates.
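The pixel-footprint idea reduces to a little trigonometry under an ideal pinhole-camera model. The sketch below assumes a distortion-free lens and a flat target plane facing the camera; the 60-degree field of view and 50-yard distance are hypothetical. A camera looking down at the field at an angle stretches each pixel’s footprint along the viewing direction, so treat this as a best case.

```python
import math


def hfov_from_lens(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view in degrees for an ideal (distortion-free) pinhole lens."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def frame_width(distance: float, hfov_deg: float) -> float:
    """Width of scene covered by the frame on a plane facing the camera at `distance`."""
    return 2.0 * distance * math.tan(math.radians(hfov_deg) / 2.0)


def per_pixel_footprint(distance: float, hfov_deg: float, h_pixels: int) -> float:
    """Physical distance spanned by one pixel on that plane (same units as distance)."""
    return frame_width(distance, hfov_deg) / h_pixels


# Hypothetical camera: 60-degree horizontal FOV, 8K sensor, 1,800 inches (50 yards) away.
print(per_pixel_footprint(1800.0, 60.0, 7680))  # roughly 0.27 inches per pixel
```

A longer focal length narrows the field of view (via `hfov_from_lens`) and shrinks the footprint, at the cost of covering less of the field per camera.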

On top of the spatial resolution used to locate the object within each camera’s frame, the frame rate of the camera system is critical for multiple reasons (assuming the cameras are perfectly synced in time). First, capturing more frames per second lets you measure the ball’s location directly more often instead of estimating its travel between frames, which introduces error. For example, MLB’s Hawk-Eye system captures only 50 frames per second (FPS) with the cameras watching the players, while it uses high-speed cameras at 100 FPS (or higher) to track 90+ MPH pitches, with the aim of getting a high-accuracy ball position at the moment the ball crosses the plate. If you don’t have a clean image of the ball in 3D space at the critical moment, such as when it crosses the plate or hits the tennis court line, you need to estimate its position at that point. Higher frame rates increase the odds of capturing a set of frames at the critical moment and reduce the amount of interpolation required.
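Estimating the ball’s position at the critical moment from the frames on either side can be as simple as linear interpolation. This minimal sketch assumes the ball travels in a straight line at constant speed between two frames, which a curving pitch does not quite do; the coordinate convention (front of the plate at `y = 0`) and the frame positions are hypothetical.

```python
Vec = tuple[float, float, float]


def interpolate_crossing(t0: float, p0: Vec, t1: float, p1: Vec,
                         axis: int = 1, plane: float = 0.0) -> tuple[float, Vec]:
    """Estimate when and where the ball crosses `plane` along `axis`, assuming
    straight-line, constant-speed motion between the two frames."""
    a, b = p0[axis] - plane, p1[axis] - plane
    if a * b > 0 or a == b:
        raise ValueError("the two frames must straddle the plane")
    frac = a / (a - b)  # fraction of the inter-frame interval elapsed at the crossing
    t = t0 + frac * (t1 - t0)
    p = tuple(u + frac * (w - u) for u, w in zip(p0, p1))
    return t, (p[0], p[1], p[2])


# Hypothetical frames 10 ms apart (100 FPS) on either side of the plate (y = 0), in inches.
t_cross, p_cross = interpolate_crossing(0.0, (0.0, 12.0, 30.0), 0.01, (0.0, -4.0, 29.0))
print(t_cross, p_cross)  # approximately 0.0075 s and (0.0, 0.0, 29.25)
```

The error from the straight-line assumption grows with the time between frames, which is why a higher frame rate helps.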

Frame rate also helps determine the accuracy of velocity estimates. By measuring the distance the object travels between frames more frequently, you can shrink the uncertainty in its speed. As an extreme example, a fast-moving object could in theory pass completely through a camera’s narrow field of view between captured frames and evade detection entirely! This is unlikely in most sports because tracking cameras have wide fields of view, but if you only get one or two shots of the ball as it flies toward home plate, estimating pitch speed is still extremely difficult because the ball could reach the glove at any point between frames.
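The “evade detection” scenario is easy to quantify: divide the length of the view along the ball’s path by the distance the ball travels per frame. This sketch assumes constant speed and an idealized view of fixed length; the 12-inch view is a deliberately extreme, hypothetical number.

```python
import math


def frames_in_view(view_length: float, speed: float, fps: float) -> tuple[int, int]:
    """Fewest and most frames that can capture an object crossing a view.

    With x = view_length / (distance traveled per frame), the count is floor(x)
    or floor(x) + 1, depending on when the first frame fires relative to the
    object's arrival (treating the view as a closed interval).
    """
    travel_per_frame = speed / fps
    x = view_length / travel_per_frame
    return math.floor(x), math.floor(x) + 1


# Hypothetical 90 mph pitch (1,584 in/s) crossing a narrow 12-inch view.
for fps in (100, 300):
    print(fps, frames_in_view(12.0, 1584.0, fps))
# At 100 FPS the fewest frames is 0: the ball can slip through unseen.
```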

This brings us to the final critical piece of information we need to track an object with cameras: the size of the object relative to the camera’s field of view. In short, smaller objects are harder to track because they occupy fewer pixels, which makes their location and shape harder to determine, whether it’s a ball like our focus so far or even a human! Again, it helps to think about an extreme case: a dart would be impossible to track with a non-HD camera mounted in the rafters of a stadium. As an object’s relative size increases, it becomes easier for computer vision systems to detect and track. I won’t go into detail on how a ball compresses and changes shape in ways that affect position estimates, but this secondary effect is critical in sports like tennis and golf, where the ball deforms significantly during play.
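Object size can be expressed in pixels with one division. The sketch assumes the frame evenly covers a hypothetical 2,700 inches (75 yards) of field; the football length (~11 inches) is approximate and the 0.25-inch dart is a made-up stand-in. Once an object spans less than a pixel, its color blends with the background inside that pixel, and detection gets very hard.

```python
def pixels_across(object_size: float, coverage: float, h_pixels: int) -> float:
    """Number of pixels an object spans, given the width of field the frame covers."""
    return object_size * h_pixels / coverage


COVERAGE_IN = 2700.0  # hypothetical: 75 yards of field per frame

print(pixels_across(11.0, COVERAGE_IN, 7680))  # ~11-inch football on an 8K camera: ~31 px
print(pixels_across(0.25, COVERAGE_IN, 1920))  # hypothetical 0.25-inch dart, 1080p: ~0.18 px
```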

With all this high-level info in hand, let’s estimate how accurate the NFL’s new Hawk-Eye system will be! To do this, I’m going to boil the estimate down to the simplest terms possible, which means setting aside secondary specs like the size and shape of the ball and the camera lenses being used, though these would matter in a more detailed analysis. Thankfully, the NFL is measuring the position of a stationary ball, so we can drop frame rate from our equations as well, though it would be critical for tracking motion.

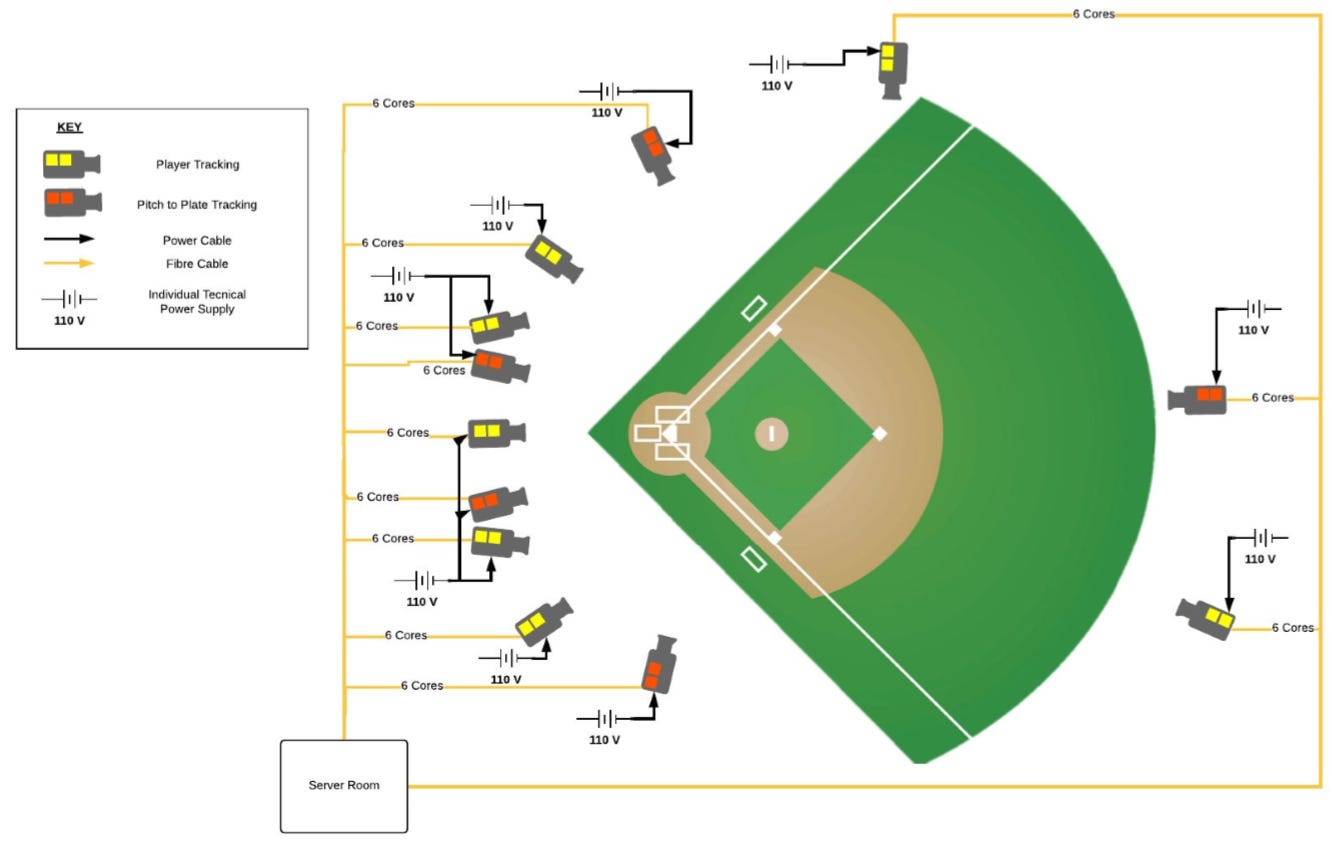

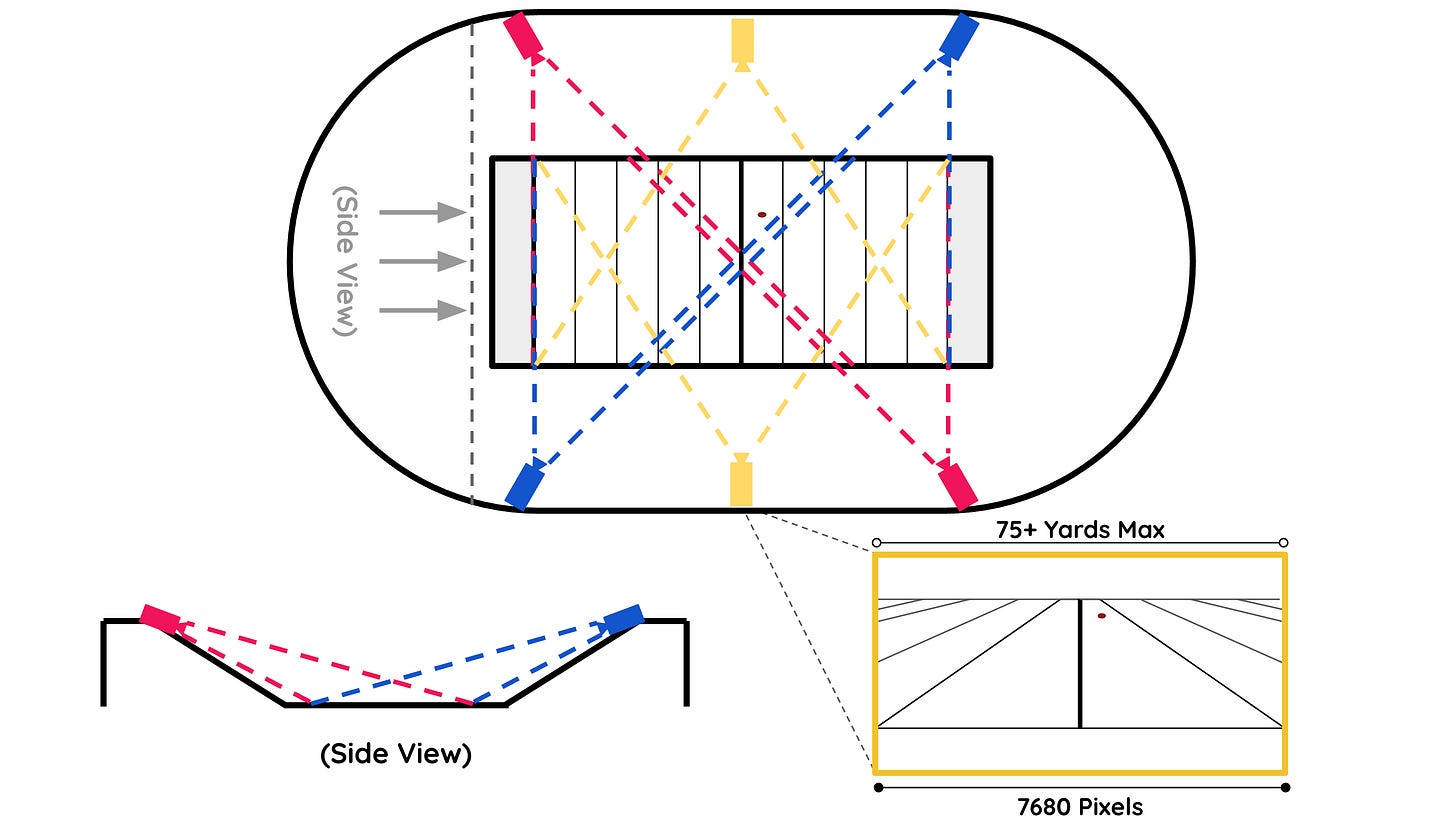

In one of its press releases, the NFL revealed some limited details about the new Hawk-Eye deployments, including that the system will comprise six 8K cameras in each NFL stadium. Given the desire to mount the cameras in relatively consistent locations across widely varied stadiums, I imagine they won’t sit directly above the field, but in a ring around the stadium at a high elevation, angled downward. With only six cameras, I think it’s reasonable to estimate that each camera covers a maximum horizontal distance of 75 yards, so that the views from both ends overlap along the length of the field. At the level of accuracy we’re after, it’s easier to work in inches: 75 yards is 2,700 inches of field across the width of each camera’s frame.

An 8K camera has 7,680 horizontal pixels, so if we assume the frame’s horizontal extent spans exactly that 2,700-inch stretch of field (a very simplifying assumption), we can divide the width of the view (2,700 inches) by the number of pixels (7,680) to estimate the theoretical accuracy (and precision): roughly 0.35” per pixel, though it may be higher or lower in certain areas of the field. This means the camera system is unlikely to be able to make deterministic calls within this margin, though the NFL may choose to do so anyway. Even with these high-level (and generous) assumptions about the camera views, the estimate seems reasonable next to the systems from other leagues listed above.
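The back-of-the-envelope estimate above is a single division, reproduced here so the assumptions are easy to tweak. The 75-yard coverage is the same rough guess as in the text; the 4K and 16K rows are added only for comparison, and the calculation ignores viewing angle, lens distortion, and any sub-pixel refinement a real system might apply.

```python
FIELD_COVERAGE_YARDS = 75  # assumed horizontal coverage per camera
INCHES_PER_YARD = 36


def inches_per_pixel(coverage_yards: float, h_pixels: int) -> float:
    """Width of field represented by one pixel, assuming the frame spans exactly the coverage."""
    return coverage_yards * INCHES_PER_YARD / h_pixels


for name, h_pixels in (("4K", 3840), ("8K", 7680), ("16K", 15360)):
    print(f"{name}: {inches_per_pixel(FIELD_COVERAGE_YARDS, h_pixels):.3f} in/pixel")
# 4K: 0.703 in/pixel
# 8K: 0.352 in/pixel
# 16K: 0.176 in/pixel
```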

It turns out the NFL themselves said in an ESPN interview that they expect the new system to be accurate within “half an inch”, not far off from our initial estimate! That is also probably less accurate than broadcasts will lead us to believe once the system is in use. In turn, this raises two critical questions: 1) how does the NFL improve performance over time, and 2) how do they overcome the challenges of expanding the system’s use cases?

The first question is, I think, relatively straightforward to answer at a high level: they can keep investing in Sony’s Hawk-Eye system (admittedly already the industry’s gold standard) to improve its performance. Improvements could come from higher camera resolution, more cameras, and even better camera placement within the stadiums. There will, of course, be technological and cost limits to these pursuits, as even 8K cameras can cost more than $8,000 apiece, and moving to 16K would be extremely expensive today. Plus, streaming more data from the cameras requires higher-bandwidth networking and more processing capability, which adds further cost. Herein lies a key trade-off between cost and accuracy.
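To put the bandwidth point in rough numbers, here is the uncompressed data rate of the camera streams. The 60 FPS capture rate and 24 bits per pixel are assumptions, not published Hawk-Eye specs, and real deployments compress video, so treat these figures as an upper bound on raw sensor output.

```python
def raw_data_rate_gbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed video data rate in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9


FPS = 60         # hypothetical capture rate
NUM_CAMERAS = 6  # the NFL's stated camera count per stadium

per_8k = raw_data_rate_gbps(7680, 4320, FPS)
per_16k = raw_data_rate_gbps(15360, 8640, FPS)
print(f"8K:  {per_8k:.1f} Gb/s per camera, {NUM_CAMERAS * per_8k:.1f} Gb/s total")
print(f"16K: {per_16k:.1f} Gb/s per camera, {NUM_CAMERAS * per_16k:.1f} Gb/s total")
```

Under these assumptions, a single 8K stream is roughly 48 Gb/s uncompressed, and moving to 16K quadruples that before any extra cameras are added.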

It’s also worth noting that higher camera resolution or narrower per-camera coverage will only make the existing system more accurate when the ball is clearly visible to multiple cameras and reliably captured in the critical position (as it is when the ball sits stationary for a first-down measurement). Better spatial resolution alone doesn’t help much when tracking a ball that moves during play, where frame rate matters as well. A football player can run at a top speed of ~20 miles per hour, which is ~350 inches per second. At that speed, even a 100 FPS camera sees the ball only once every ~3.5 inches and must make interpolated estimates that may not be as accurate as desired. On top of that, the cameras might not be able to see the ball at all for extended periods because players or referees block the view.
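The running-speed arithmetic is a unit conversion followed by a division by frame rate. This short sketch checks it for a few frame rates; the 20 mph figure comes from the text, and the frame rates are illustrative.

```python
INCHES_PER_MILE = 63_360
SECONDS_PER_HOUR = 3_600


def mph_to_inches_per_second(mph: float) -> float:
    """Convert miles per hour to inches per second."""
    return mph * INCHES_PER_MILE / SECONDS_PER_HOUR


def travel_per_frame(mph: float, fps: float) -> float:
    """Inches an object moves between consecutive frames."""
    return mph_to_inches_per_second(mph) / fps


print(mph_to_inches_per_second(20))  # 352.0
for fps in (50, 100, 300):
    print(fps, round(travel_per_frame(20, fps), 2))
```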

Given this major line-of-sight issue for vision systems tracking football specifically, it’s easy to see why the NFL initially chose an LPS-based athlete- and ball-tracking system from Zebra: it doesn’t need line of sight to work! UWB radio signals can travel through bodies and other objects (like pads) to reach a transceiver. This fact alone makes me think that a hybrid system combining computer vision with another sensing modality like UWB radio might eventually be the winning approach for the NFL if they truly want to move from calling first downs to actually spotting the ball on every play. However, I think Zebra and Hawk-Eye would need to work out new arrangements for the cameras and for the LPS beacons and transceivers, perhaps placing the latter on the sidelines or under the field. I could even see them developing new predictive algorithms for the motion of a ball within an obscured pile (call it “pile mechanics”).
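One simple way a hybrid system could combine the two sensors is inverse-variance weighting: trust each estimate in proportion to its precision, and fall back to UWB alone when the cameras can’t see the ball. This is a textbook fusion rule, not how Zebra or Hawk-Eye actually work. It assumes independent, unbiased, roughly Gaussian errors, and the ±0.5” and ±6” figures are borrowed loosely from the accuracy numbers quoted earlier and treated as standard deviations. A real system would more likely run something like a Kalman filter over time.

```python
from typing import Optional

# (position along the field in inches, 1-sigma uncertainty in inches)
Estimate = tuple[float, float]


def fuse(vision: Optional[Estimate], uwb: Estimate) -> Estimate:
    """Inverse-variance weighted average of two independent, unbiased estimates.

    If the cameras are occluded (vision is None), fall back to the UWB estimate alone.
    """
    if vision is None:
        return uwb
    (x_v, s_v), (x_u, s_u) = vision, uwb
    w_v, w_u = 1.0 / s_v ** 2, 1.0 / s_u ** 2
    x = (w_v * x_v + w_u * x_u) / (w_v + w_u)
    sigma = (w_v + w_u) ** -0.5  # fused uncertainty is smaller than either input
    return x, sigma


# Hypothetical readings: cameras say 1000.0 in (±0.5), UWB says 1004.0 in (±6).
print(fuse((1000.0, 0.5), (1004.0, 6.0)))  # lands very close to the camera estimate
print(fuse(None, (1004.0, 6.0)))           # ball buried in a pile: UWB only
```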

All in all, I’m very optimistic about the NFL’s ability to eventually deploy a ball-tracking system that can estimate the ball’s position at all times during a game. Hopefully, after this dive into the existing system and its accuracy characteristics, you share that optimism, but also carry a healthy skepticism about the accuracy these systems implicitly portray. The computer vision systems used today are already better than humans at judging “close calls” throughout the world of sports, and while they still have a way to go to live up to the accuracy and precision shown in modern animated replays, their current performance is the worst it will ever be.

I, for one, can’t wait for the day when we get the first semi-automated-offsides- or tennis-style animated replay showing a player’s knee touching the ground and whether the football crossed the first-down line or the end-zone plane. It sounds like a crazy future, but we’ll get there eventually, and once we do, we can once again ask the key question: how accurate is this system relative to how it’s being portrayed?

Also, if you want to have a good laugh, check out Sora’s first crack at generating this type of animated replay (graphic design is my passion):